Via @rodhilton@mastodon.social

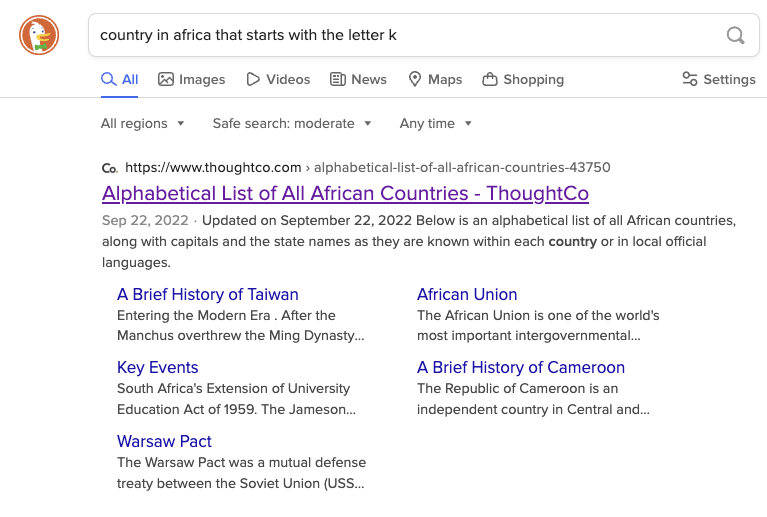

Right now, if you search for “country in Africa that starts with the letter K”:

- DuckDuckGo links to an alphabetical list of countries in Africa, which includes Kenya.
- Google's first hit is a ChatGPT transcript in which it claims there are none, and Google's summary repeats the same claim.

This is because ChatGPT at some point ingested this popular joke:

“There are no countries in Africa that start with K.” “What about Kenya?” “Kenya suck deez nuts?”

AI is its own worst enemy. If you can’t identify AI output, AIs are going to train on AI-generated content, which really hurts the model.

It’s literally in everyone’s best interest, including AI itself, to start embedding some kind of identification in all output.

Those studies are flawed. By definition, once you can no longer tell the difference, the effect on training is nil.

It’s more like successive generations of inbreeding. Unless your AI content is perfect, meaning it exactly mirrors the diversity of human content, the drivel will amplify over time.
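The inbreeding argument can be illustrated with a deliberately crude toy simulation. It is only a sketch under stated assumptions: the "model" is nothing more than the empirical token frequencies of its training sample, generation 0 is a made-up Zipf-like "human" distribution, and the vocabulary size, sample size, and number of generations are arbitrary. Each generation trains only on output sampled from the previous one, so any rare token that happens to draw zero samples gets probability zero and can never come back.

```python
import random
from collections import Counter


def next_generation(probs, n_samples, rng):
    """'Train' a new model on n_samples drawn from the previous model.

    Toy assumption: a model is just the empirical frequency of each token
    in its training sample, a crude stand-in for a real language model.
    """
    tokens = list(probs)
    weights = [probs[t] for t in tokens]
    sample = rng.choices(tokens, weights=weights, k=n_samples)
    counts = Counter(sample)
    # Tokens absent from the sample get probability 0 and are never sampled again.
    return {t: counts[t] / n_samples for t in tokens}


def simulate(vocab_size=50, n_samples=200, generations=30, seed=0):
    """Return the number of tokens with nonzero probability per generation."""
    rng = random.Random(seed)
    # Generation 0: hypothetical long-tailed "human" distribution (Zipf-like).
    raw = [1 / (rank + 1) for rank in range(vocab_size)]
    total = sum(raw)
    probs = {f"tok{i}": w / total for i, w in enumerate(raw)}
    surviving = [vocab_size]
    for _ in range(generations):
        probs = next_generation(probs, n_samples, rng)
        surviving.append(sum(1 for p in probs.values() if p > 0))
    return surviving


if __name__ == "__main__":
    print("distinct tokens per generation:", simulate())
```

Real training is far more complicated than refitting frequencies, so this only shows the direction of the effect (the tail of the distribution is lost and never recovered), not its size.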

Given the Chinchilla scaling laws, nobody in their right mind trains models by shotgun-ingesting all available data anymore. At this point, gains come more from data quality than from volume.
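For context, the Chinchilla result is often summarized as a rule of thumb: for a fixed compute budget, train on roughly 20 tokens per parameter, using the common approximation that training costs about 6 FLOPs per parameter per token (C ≈ 6·N·D). The sketch below just solves those two rounded relations; it is not the paper's fitted scaling law, and both constants are approximations. It only shows that, under this rule of thumb, a given compute budget implies a particular training-set size rather than "all the data you can find".

```python
import math

# Rounded rule-of-thumb constants (approximations, not fitted values).
TOKENS_PER_PARAM = 20        # compute-optimal training tokens per parameter
FLOPS_PER_PARAM_TOKEN = 6    # training cost: C ≈ 6 * N * D FLOPs


def compute_optimal_size(compute_flops: float) -> tuple[float, float]:
    """Return (parameters, training tokens) for a given FLOP budget.

    Solves C = 6 * N * D together with D = 20 * N, i.e. C = 120 * N**2.
    """
    n_params = math.sqrt(compute_flops / (FLOPS_PER_PARAM_TOKEN * TOKENS_PER_PARAM))
    n_tokens = TOKENS_PER_PARAM * n_params
    return n_params, n_tokens


if __name__ == "__main__":
    # Compute budget of a 70B-parameter model trained on 1.4T tokens.
    budget = FLOPS_PER_PARAM_TOKEN * 70e9 * 1.4e12
    params, tokens = compute_optimal_size(budget)
    print(f"{params:.3g} parameters, {tokens:.3g} tokens")
```

With the 70B-parameter, 1.4T-token figures usually quoted for Chinchilla itself, this returns about 7e10 parameters and 1.4e12 tokens, i.e. the rule of thumb reproduces its own 20:1 ratio.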