So it turns out the cause was indeed a rogue change they couldn’t roll back, as we had been speculating.

Weird that whatever this issue is didn’t occur in their test environment before they deployed into Production. I wonder why that is.

All companies have a test environment. Some companies are lucky enough to have a separate environment for production.

Change Manager who approved this is gonna be sweating bullets lol

“Let’s take a look at the change request. Now, see here, this section for Contingencies and Rollback process? Why is it blank?”

I’m sure there was a rollback process, it just didn’t work.

If you don’t test a restored backup, you don’t have a backup. Same applies here.

It’s as if they were running Windows 10 Home on their servers and had an upgrade forced onto them.

How else do you explain to the layman “catastrophic failure in the configuration update of core network infrastructure and its preceding, meant-to-be-soundproof, processes”?

This happens in my business all the time…the test FTP IP address is left in the code and shit falls apart costing us millions… They hold a PIR and then it happens again.

SNAFU

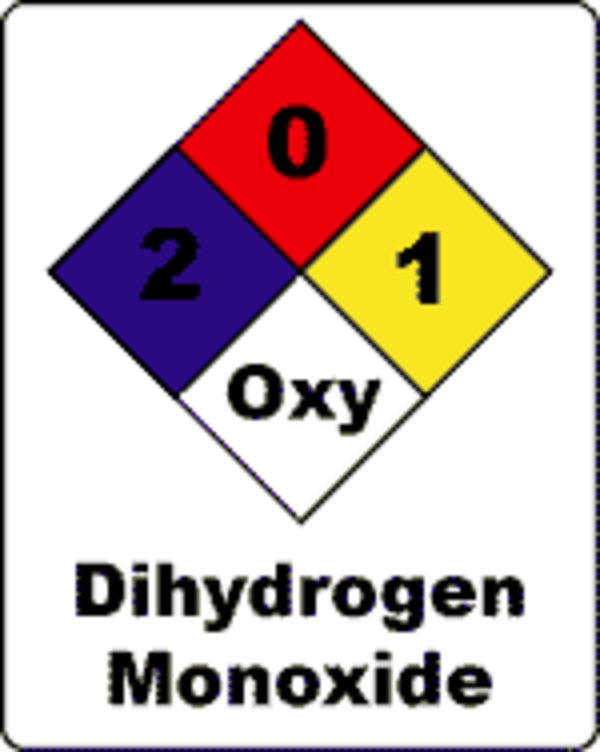

This should be their new logo. https://imgur.com/a/bdIUayH

Optus: Yes We Fucked Up

If this is how they do their routine updates, they have had an extremely lucky run so far. Inadequate understanding of what the update would/could do, inadequate testing prior to deployment, no rollback capability, no disaster recovery plan. Yeah nah, you can’t get that lucky for that long. Maybe they have cut budget or sacked the people who knew what they were doing? Let’s hope they learn from this.