TLDR: Laser printer, no AC, high humidity.

I live in Singapore, where it's hot and humid pretty much all the time.

I’m lucky to live in a house with a good breeze, so I tend to keep the windows open in all the rooms and rarely turn the air conditioning on. When I do, it’s only for the room I’m in.

My servers and printer are in a room that usually has no air conditioning, and this hasn’t been a problem historically. But I just got a Brother laser printer, and when unpacking it I noticed a massive desiccant packet packed inside the print area.

I wonder if anyone has had issues with electronics or a laser printer in a high-humidity environment?

When I lived in an area with a heavy sea breeze, I had electronics rust from the inside because of the salt in the air. So I do wonder.

I have noticed paper I leave in a high humidity environment tends to become less rigid over time.

Anecdote time: I’m an IT dude for the offshore industry. I deal with production server clusters on board ships, and I usually show up when we’re mobilizing to make sure the system is ready to go. In Europe this is mostly smooth sailing, pun only partially intended.

Back in April I showed up in Singapore two weeks before anyone else, because there was some maintenance I needed to do beforehand, mostly setting up some huge RAID volumes from scratch and (luckily) restoring some stuff from a backup. This would mostly involve a lot of waiting at the hotel while periodically checking in via VPN.

These production clusters consist of four servers mounted in an air-conditioned rack, which in turn is mounted in an air-conditioned shipping container. This way the system can be used all over the world without much adaptation.

When preparing to do this maintenance I did what I always do at the start: connect power but leave the UPS off, keeping only the AC running. I then grabbed a Grab taxi and went to Sim Lim Tower for some odds and ends I needed, with the intention of letting the AC do its thing for a few hours.

After some shopping, geek browsing, and lunch, I returned and checked that the temperature and humidity in the rack were fine. I then started powering things up. Network: fine, both the 10gig and 100gig networks. The various purpose-built proprietary hardware was also fine. Then I powered on the servers, and that’s when the issues started: the first one didn’t power on. “Huh, odd,” I thought. Not too alarming, as I was doing it remotely over LAN, which sometimes croaks and can often be fixed by flashing the firmware on the management interface. So I went for the button instead. Nothing. “Shit…”.

I always keep a spare server with the systems for cases like this, so I started manhandling these 70 kg beasts, getting the faulty one out and the spare in and swapping over all the drives. After powering it on and importing the RAID arrays, it worked as intended. The most important server was up and running.

2nd server, almost as important as the first one: same issue. “Shit, that was my one spare.” So I started troubleshooting, swapping CPUs around to make sure they weren’t the issue (each server has two and can run with just one, so that was unlikely).

After spending an entire day checking every combination, I concluded that I had two faulty motherboards on my hands, despite them working fine before they were shipped from Europe. I called my vendor and, to my luck, they had a motherboard of the right type in stock. The only problem was that it was on the other side of the planet.

I then phoned a coworker of mine. I knew he was flying in the day after, so I offered him free beer on arrival if he would drive three hours to the vendor, pick up my motherboard, and hand-carry it around the world to me.

Luckily the 3rd and 4th servers powered on fine, so the RAID operation could churn along while I waited for spares. My coworker arrived with the spare a few hours before I needed everything working, and there was a frantic scramble of tools, personnel and hardware to get the last machine operational. Swapping motherboards in these servers is bloody annoying: imagine swapping the motherboard in a normal PC, but with ten times as much hardware populating it.

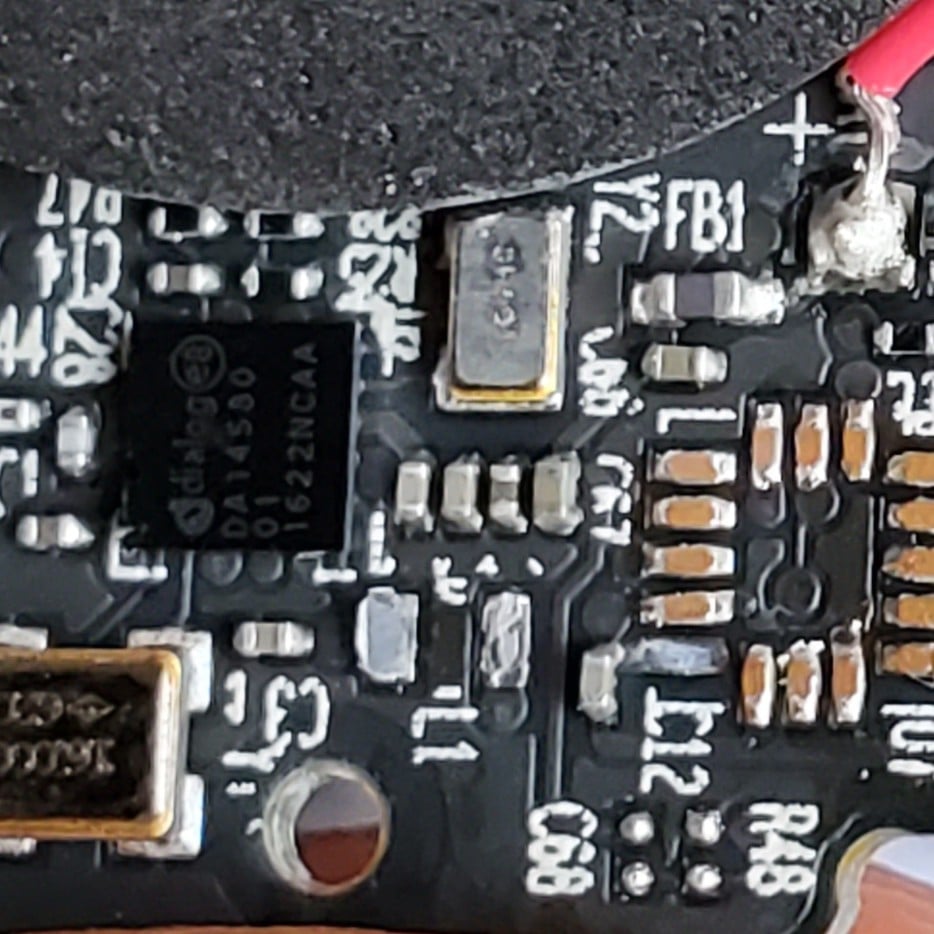

Finally, an hour before the ship was due to sail, everything was powered and ready to go. Investigation indicated that even though the air measured fine, there was most likely too much humidity inside the two servers at the bottom. Moral of the story: electronics don’t like the Singaporean climate.

After a stressful week I finally got to properly unwind by overdosing on Burnt Ends and Shiner Bock at Decker BBQ that night.

So what’s the new run book for starting your skiffs in other countries? Hygrometer readings in the chassis before applying power?

Great storytelling, by the way. Very gripping, I was enthralled the entire time.

The most important servers now go on top (we can run relatively fine without one of them, with reduced capacity, as long as the cluster primaries work), and we run the AC for longer during mobilization (a full day and overnight). Also, this utility hatch had a broken gasket and loads of bolts missing. This has now been fixed to make sure the container is reasonably airtight.

I’ve also considered some sort of heater in the bottom of the rack to prevent condensation during the initial AC cycle.
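For anyone turning “check humidity before applying power” into a run-book step: relative humidity alone doesn’t tell you whether water will condense on the hardware. What matters is whether any surface in the chassis is colder than the dew point of the surrounding air, which is also why a heater at the bottom of the rack could help. Below is a minimal sketch, assuming the common Magnus approximation (Sonntag constants) for dew point. The function names, the 3 °C margin, and the sample readings are all hypothetical, not anything from a vendor spec.

```python
import math

# Magnus-formula constants (Sonntag 1990), reasonable roughly -45..60 °C over water.
MAGNUS_B = 17.62
MAGNUS_C = 243.12  # °C


def dew_point_c(air_temp_c: float, rel_humidity_pct: float) -> float:
    """Approximate dew point in °C from air temperature and relative humidity."""
    if not 0 < rel_humidity_pct <= 100:
        raise ValueError("relative humidity must be in (0, 100]")
    gamma = math.log(rel_humidity_pct / 100.0) + MAGNUS_B * air_temp_c / (MAGNUS_C + air_temp_c)
    return MAGNUS_C * gamma / (MAGNUS_B - gamma)


def safe_to_power_on(surface_temp_c: float, air_temp_c: float,
                     rel_humidity_pct: float, margin_c: float = 3.0) -> bool:
    """True if the coldest hardware surface sits at least margin_c above the dew point.

    margin_c is a hypothetical safety margin, not a vendor figure.
    """
    return surface_temp_c - dew_point_c(air_temp_c, rel_humidity_pct) >= margin_c


if __name__ == "__main__":
    # Hypothetical readings: humid container air, chassis surface still cold.
    print(round(dew_point_c(30.0, 80.0), 1))  # → 26.2
    print(safe_to_power_on(surface_temp_c=20.0, air_temp_c=30.0,
                           rel_humidity_pct=80.0))  # → False
```

In practice you’d want the surface reading from the coldest spot (e.g. the bottom of the rack), since that’s where condensation shows up first.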

If you had been able to get the humidity down, before applying power, do you think the servers would have been okay?

Maybe. It could be that the damage was already done, as the container spent six months being slow-shipped around the world. That utility hatch was basically letting sea air in continuously during transit.

I have had issues with the paper not feeding well and jamming as a result of high humidity in a copier/printer - but this was a cool/cold high-humidity site in the UK.

That was resolved by fitting an optional heated paper drawer.

> fitting an optional heated paper drawer.

That's a new one indeed.

Yes. I had no idea they existed until we had the problem and looked into it.

I never thought of that!

https://all3dp.com/2/heated-3d-printer-enclosure-heater/

After googling and seeing those 3D printer enclosures, I think something similar could work for a normal laser printer. It’d be much cheaper than air conditioning the entire room!

You can probably even omit any heating and just use those desiccant sacks. They can be reused.

Laser tech here. Humidity is baaad news for laser printers. Beyond the paper’s higher water content causing massive page curl and jamming, and the humidity making the toner clump and rendering the toner transport parts useless, you can even see fogging on the mirrors and lenses of the laser unit.

That being said, I hail from Arkansas, which is basically a swamp filled with bigots. If printers work here, you’ll be fine. Keep some silica packets in the paper drawers and wherever you store extra paper. The big thing, as with 3D printers, is to avoid drastic temperature/moisture changes. They can cause all kinds of inconsistencies and even premature fuser failure. Have fun!

Swamp filled with bigots. I like that.


I work in automation. We had one site in Florida that, despite the AC running all the time, had humidity issues. The electronics seemed to handle it OK, but paper was another story. This site had to print information packs to be packaged up with the product, and it was a nightmare for the collating and folding automation, which just never wanted to work properly. The printers themselves would also jam and have more issues than they should.