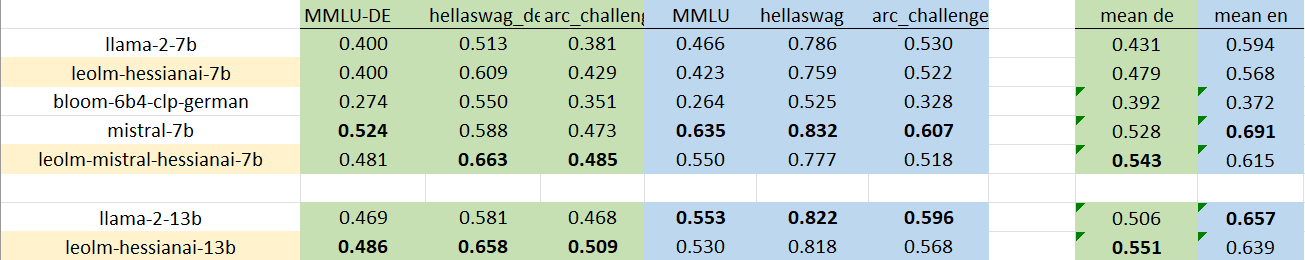

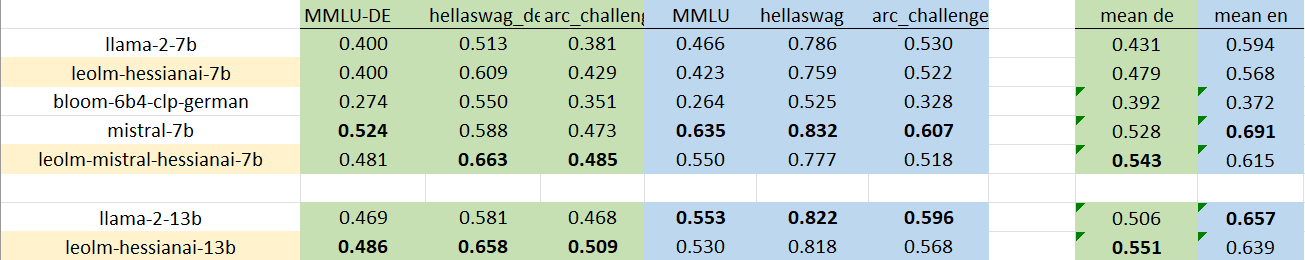

Our models extend Mistral’s capabilities into German through continued pretraining on a large corpus of German-language and mostly locality-specific text.

Other fine-tuned models for foreign languages:


Thanks for the suggestion. Somehow I can’t reproduce your results. How do you do it? I’ve tried it with Oobabooga’s WebUI (for now):

I tried AutoGPTQ first. AutoGPTQ won’t let me install it without CUDA. I got around that, but now it says:

```
TypeError: mistral isn't supported yet.
```

(It also errors out with a different error message if I try Llama2.)

I then switched to Transformers. With the GPTQ version of the model it says:

```
RuntimeError: GPU is required to quantize or run quantize model.
```

Then I deleted the quantized version and downloaded the whole thing. It takes 9 minutes with my internet speed.

```
Loaded the model in 538.26 seconds.
```

On inference:

```
UserWarning: The installed version of bitsandbytes was compiled without GPU support. 8-bit optimizers, 8-bit multiplication, and GPU quantization are unavailable.
```

Loading it without quantization makes Oobabooga just sit there and pretend to do something, but nothing happens. I also doubt you’re getting much performance out of it this way. Inference on a CPU is memory-bound, and I seriously doubt it’s anywhere near as fast now that I’m squeezing 15GB of data through the memory bus instead of roughly 4GB. So I’ve stopped fiddling around with it for now.
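A rough back-of-the-envelope for the memory-bound argument: when generating token by token, every weight has to be streamed from RAM once per token, so throughput is capped at roughly memory bandwidth divided by model size. The 50 GB/s bandwidth below is an assumed figure for a desktop with dual-channel RAM, not something measured in this thread.

```python
def max_tokens_per_second(model_size_gb: float, bandwidth_gb_s: float) -> float:
    """Upper bound on generation speed if each token reads all weights once."""
    if model_size_gb <= 0:
        raise ValueError("model size must be positive")
    return bandwidth_gb_s / model_size_gb


# Assumed memory bandwidth; substitute the figure for your own machine.
BANDWIDTH_GB_S = 50.0

for label, size_gb in [("unquantized (~15 GB)", 15.0), ("4-bit quantized (~4 GB)", 4.0)]:
    print(f"{label}: <= {max_tokens_per_second(size_gb, BANDWIDTH_GB_S):.1f} tokens/s")
```

Under that assumption the 15 GB model tops out around 3 tokens/s versus about 12 for the 4 GB file. Real numbers are typically lower, since compute and the KV cache cost time too, but the ratio is the point.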

Do you use it on CPU? If so, do you use quantization? Which one? I’d like to try it myself, but I don’t want to try every possibility just to find out which one works.

Disagree. Georgi Gerganov seems to be a crazy dude and on fire, and so is the whole community behind the project. Regarding CPU inference and quantization, they were light-years ahead of Hugging Face, which only recently managed to get proper quantization in. As far as I understand, GPTQ isn’t as elaborate as the quantization ggml does. There is AWQ, but that doesn’t seem to be part of Transformers and the usual pipeline. It’s the same with all the other major advancements I’ve witnessed: I think llama.cpp had RoPE scaling first, and several other things. They can do nice things like reuse the KV cache and avoid recalculating the whole context…
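To make “the quantization ggml does” a bit more concrete: its simpler formats store weights in small blocks (32 values per block in Q4_0), each block with its own scale, so an outlier only degrades its own block. The sketch below is a simplified absmax variant of that idea, not ggml’s exact Q4_0 layout or rounding; the function names and toy weights are made up for illustration.

```python
BLOCK_SIZE = 32  # Q4_0 uses 32 weights per block; other formats differ


def quantize_block(values: list[float]) -> tuple[float, list[int]]:
    """Map a block of floats to signed 4-bit ints in [-8, 7] plus one float scale."""
    amax = max(abs(v) for v in values)
    scale = amax / 7 if amax > 0 else 1.0
    q = [max(-8, min(7, round(v / scale))) for v in values]
    return scale, q


def dequantize_block(scale: float, q: list[int]) -> list[float]:
    return [scale * x for x in q]


def quantize(weights: list[float]) -> list[tuple[float, list[int]]]:
    # Each block gets its own scale, so one large weight only affects 32 values.
    return [quantize_block(weights[i:i + BLOCK_SIZE]) for i in range(0, len(weights), BLOCK_SIZE)]


def dequantize(blocks: list[tuple[float, list[int]]]) -> list[float]:
    out: list[float] = []
    for scale, q in blocks:
        out.extend(dequantize_block(scale, q))
    return out


weights = [((i * 37) % 101 - 50) / 100 for i in range(128)]  # deterministic toy weights
blocks = quantize(weights)
restored = dequantize(blocks)
max_err = max(abs(a - b) for a, b in zip(weights, restored))
print(f"{len(blocks)} blocks, max abs error {max_err:.4f}")
```

Storage then works out to 4 bits per weight plus one scale per 32 weights (ggml keeps it as fp16), i.e. 4.5 bits per weight instead of 16, which is roughly where the ~15 GB versus ~4 GB difference comes from.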

Things like StreamingLLM and sliding window attention are being worked on in both projects.
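The StreamingLLM idea, roughly: keep the KV entries of the first few tokens (the “attention sinks”) plus a sliding window of the most recent ones, and evict everything in between. This toy sketch shows only that eviction policy, over token positions rather than real key/value tensors; `n_sink`, `window` and the class name are illustrative, and it leaves out how positions are reassigned inside the cache.

```python
from collections import deque


class SinkWindowCache:
    """Keeps the first `n_sink` entries forever plus the last `window` entries."""

    def __init__(self, n_sink: int, window: int):
        self.n_sink = n_sink
        self.sinks: list[int] = []
        # A bounded deque drops its oldest entry automatically when full.
        self.recent: deque = deque(maxlen=window)

    def append(self, token_pos: int) -> None:
        if len(self.sinks) < self.n_sink:
            self.sinks.append(token_pos)
        else:
            self.recent.append(token_pos)

    def contents(self) -> list[int]:
        return self.sinks + list(self.recent)


cache = SinkWindowCache(n_sink=4, window=6)
for pos in range(20):
    cache.append(pos)
print(cache.contents())
```

Memory stays bounded at `n_sink + window` entries no matter how long the stream gets, which is what makes unbounded generation possible without recomputing the context.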

For the last few months it has been like this: as soon as I read a potentially influential paper, someone had already opened an issue on llama.cpp’s GitHub page. It gets on the roadmap and done eventually, or you just wake up the next day and some crazy person has pulled an all-nighter and implemented all the maths for it.

Whereas progress with HF Transformers seemed slow. It either supported a specific use case, or I had to spend several hours getting some fork with lots of custom code from the researchers’ GitHub working.

And I remember fighting with GPTQ, the different branches for triton, cuda kernels and stuff, incompatible models and you needed to pull exactly the right commit or nothing would work.

With llama.cpp (or KoboldCpp, in my case), it’s just

```sh
git pull; make clean && make LLAMA_OPENBLAS=1 LLAMA_CLBLAST=1
```

and off you go. No gigabytes of PyTorch and Python modules with platform-specific and sometimes incompatible binaries/wheels attached. But you’re right: if you’re only using HF Transformers for one of its well-supported use cases, without a complex project around it, and on the same hardware as everyone else, it’s easy. And you’re right that the code is short: I’ve written a small wrapper myself, and the code to use the AutoSomethingForSomething classes and do inference fits in a few lines. But so does the example C code for llama.cpp.

Do they (HF) really implement things like QA-LoRA, or the newer stuff we talked about two weeks ago, quickly? I’m not sure. To me it seems more like implementations come from outside, and things get included at some point once enough people use or request them. In my observation they aren’t really using their resources to ‘keep up’; they’re more following what happens. But they do great things, too. I don’t want to badmouth them. We wouldn’t be where we are without HF, and Transformers in particular. It was there years before GGML was a thing, and all the researchers and projects build upon Transformers. And once you do more than just simple inference, you’re probably going to use it.

But having some example code along with a new paper only gets you so far. Once you want to incorporate those new advancements into your own projects and really use them, it gets difficult. All the custom additions, plus the old forked and customized dependencies the researchers set up when they started working on it, make it hard to do anything with the code without dissecting the whole thing.

TL;DR You’re right, pytorch and transformers need more memory.

I’ll respond to the CPU inference part first, for the transformers library.

In transformers, I don’t use quantization. :L If you’re used to Q4 speed, then it will be slower than that. For a 7B model it’s almost okay on CPU.

Yeah, it seems like you use low-bit quantized downloads. D: It’s not for you.

But you were on the right track with that 15GB download, because you downloaded the raw release. Neither GPTQ nor AWQ is what we use in transformers (for new releases). ^^
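Where the ~15 GB comes from: the raw release stores every weight as a 16-bit float. The parameter count below (7.24 billion, the figure usually quoted for Mistral 7B) is an assumption, and the 8.5 and 4.5 bits per weight assume ggml-style blocks of 32 weights with one fp16 scale each (Q8_0/Q4_0). File sizes on disk also include a little metadata.

```python
def weights_size_gb(n_params: float, bits_per_weight: float) -> float:
    """Approximate size of the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return n_params * bits_per_weight / 8 / 1e9


N_PARAMS = 7.24e9  # assumed: roughly Mistral 7B

formats = [
    ("fp32", 32),
    ("fp16/bf16", 16),
    ("8-bit (Q8_0-style)", 8.5),
    ("4-bit (Q4_0-style)", 4.5),
]
for name, bpw in formats:
    print(f"{name:>20}: {weights_size_gb(N_PARAMS, bpw):5.1f} GB")
```

That lines up with the ~15 GB full download versus ~4 GB for a 4-bit file. It is also roughly the RAM transformers needs just to hold the unquantized model, and double that if the model is loaded in fp32, which is what `from_pretrained` does unless you pass a `torch_dtype`.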

That’s why I prefer C/Rust code: it just works. It will always be faster than whatever HF releases, with or without quantization.

Right, C/Rust code is more optimized.

PyTorch without an Nvidia card is less common >:D That’s how I started.

IMHO most GitHub projects release buggy code: they don’t set device(‘cpu’) for CPU users. Avoiding dependency hell is a must. I prefer a single commented file, not a complex Python project that spits out “bitsandbytes” errors.

So does HF, in their *cough* code *cough*. The same code in C is likely more readable, too.

The reason I mentioned transformers is that this one line takes care of new model releases with all the bug fixes, just as the `*.cpp` projects do.

```python
from transformers import pipeline

generator = pipeline('text-generation', model='NousResearch/Nous-Capybara-7B')
generator('Here is the prompt.')
```

We run out of context?! Fix that. Use rotary embedding (RoPE) scaling.

```python
from transformers import AutoModelForCausalLM, pipeline

model = AutoModelForCausalLM.from_pretrained(
    "NousResearch/Nous-Capybara-7B",
    # dynamic RoPE scaling for inputs longer than the trained context
    rope_scaling={"type": "dynamic", "factor": 2.0},
)
generator = pipeline('text-generation', model=model)
generator('Here is the prompt.')
```

Does it eat all your RAM? It does. It just works™, with fine print.
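What `rope_scaling={"type": "dynamic", "factor": 2.0}` roughly does: once the input grows past the model’s trained context, the rotary base is enlarged so the position frequencies stretch over the longer sequence. The function below mirrors the dynamic-NTK formula from transformers’ Llama rotary-embedding code as I understand it; the values for `base`, `dim` and `max_pos` are assumed Llama-style defaults, and the function names are illustrative.

```python
def dynamic_ntk_base(base: float, dim: int, seq_len: int, max_pos: int, factor: float) -> float:
    """Rotary base after dynamic NTK scaling; unchanged while seq_len fits the trained context."""
    if seq_len <= max_pos:
        return base
    return base * ((factor * seq_len / max_pos) - (factor - 1)) ** (dim / (dim - 2))


def inv_freq(base: float, dim: int) -> list[float]:
    """Per-pair rotation frequencies used by RoPE: base^(-2i/dim)."""
    return [base ** (-(2 * i) / dim) for i in range(dim // 2)]


BASE, DIM, MAX_POS, FACTOR = 10000.0, 128, 4096, 2.0  # assumed Llama-style values

for seq_len in (2048, 4096, 8192):
    b = dynamic_ntk_base(BASE, DIM, seq_len, MAX_POS, FACTOR)
    print(f"seq_len={seq_len}: base={b:,.0f}, lowest freq={inv_freq(b, DIM)[-1]:.2e}")
```

A larger base lowers the slowest frequencies, so positions beyond the trained length still map to rotation angles the model has roughly seen before. Short prompts are left untouched, which is the “dynamic” part.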

How do you train with those tools? Download another tool! With transformers:

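```python
from transformers import AutoModelForCausalLM
from trl import SFTTrainer

# Assumes `dataset` is a datasets.Dataset with a "text" column
model = AutoModelForCausalLM.from_pretrained(
    "NousResearch/Nous-Capybara-7B",
    use_flash_attention_2=True,  # needs a CUDA GPU and the flash-attn package
)
trainer = SFTTrainer(
    model,
    train_dataset=dataset,
    dataset_text_field="text",
    max_seq_length=512,  # context size is 512
)
trainer.train()
```

Does it eat all your RAM? Yup, it goes beyond 64GB.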
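Why full fine-tuning blows past 64 GB: a common rule of thumb for plain fp32 training with Adam is 4 bytes for each weight, 4 for its gradient and 8 for Adam’s two moment estimates, about 16 bytes per parameter before activations are even counted. The breakdown below is that rule of thumb, not a measurement of this exact setup; mixed precision or 8-bit optimizers change the numbers.

```python
BYTES_PER_PARAM = {
    "weights (fp32)": 4,
    "gradients (fp32)": 4,
    "Adam first moment": 4,
    "Adam second moment": 4,
}


def training_memory_gb(n_params: float, bytes_per_param: dict[str, int]) -> float:
    """Memory for weights, gradients and optimizer state only (no activations)."""
    return n_params * sum(bytes_per_param.values()) / 1e9


n_params = 7e9  # a "7B" model
print(f"~{training_memory_gb(n_params, BYTES_PER_PARAM):.0f} GB before activations")
```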

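```python
from peft import LoraConfig

# Reuses `model`, `dataset` and SFTTrainer from the snippet above
peft_config = LoraConfig(r=16, task_type="CAUSAL_LM")  # rank-16 LoRA adapters
trainer = SFTTrainer(
    model,
    train_dataset=dataset,
    dataset_text_field="text",
    peft_config=peft_config,
)
trainer.train()
```

Now it eats less RAM with LoRA.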
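Why LoRA helps: instead of updating a full d×k weight matrix, it trains two small matrices B (d×r) and A (r×k) whose product is added to the frozen weight, so gradients and optimizer state only exist for r·(d+k) numbers. The 4096×4096 shape below is an assumed attention-projection size for a 7B model, with r=16 as in the `LoraConfig` above; the helper names are illustrative.

```python
def lora_trainable_params(d: int, k: int, r: int) -> int:
    """Parameters in the low-rank update B @ A, with B: d x r and A: r x k."""
    return r * (d + k)


def full_params(d: int, k: int) -> int:
    """Parameters updated when fine-tuning the whole d x k matrix."""
    return d * k


d = k = 4096  # assumed projection size
r = 16
lora = lora_trainable_params(d, k, r)
full = full_params(d, k)
print(f"full: {full:,}  LoRA: {lora:,}  ({100 * lora / full:.2f}% of full)")
```

The frozen base weights still have to fit in memory; the saving comes from the gradients and optimizer state, which now cover well under 1% of each adapted matrix.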